- by John Luxford

This week's update has a number of features we're really excited to share, so I'll jump right in!

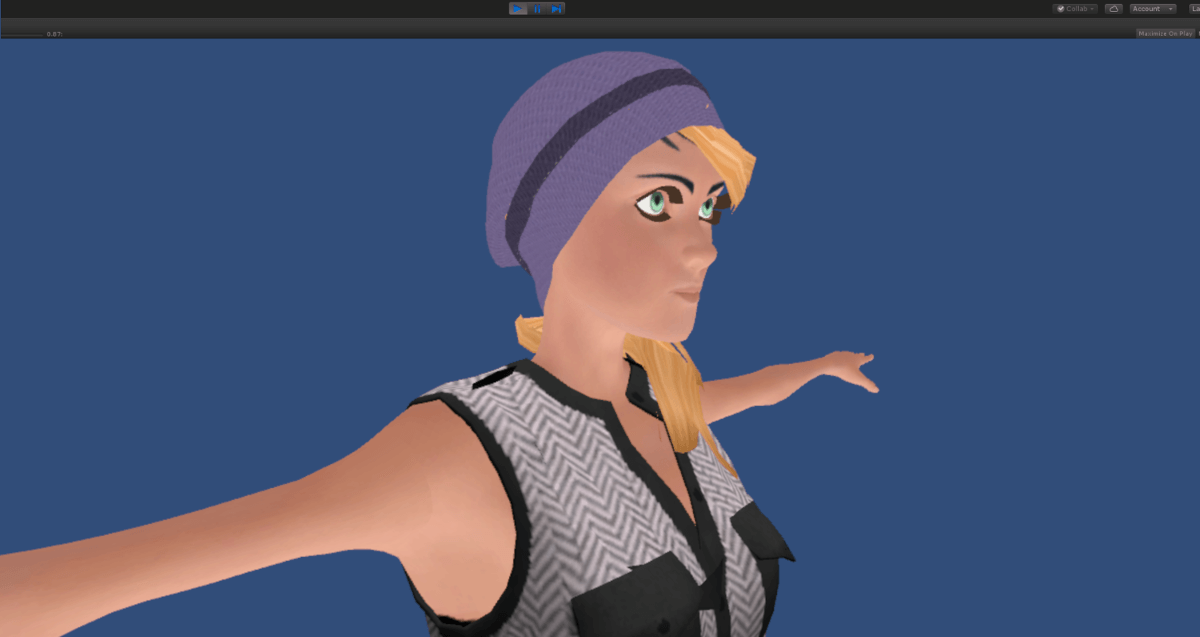

Jess, Phillip, and Meg round out our set of default human characters, and they couldn't be happier to join the others in Flipside to help you make your shows.

We were having issues with textures not loading properly on imported custom props, which limited the usefulness of our custom prop importer. We've made some big improvements that should make the importer much more useful.

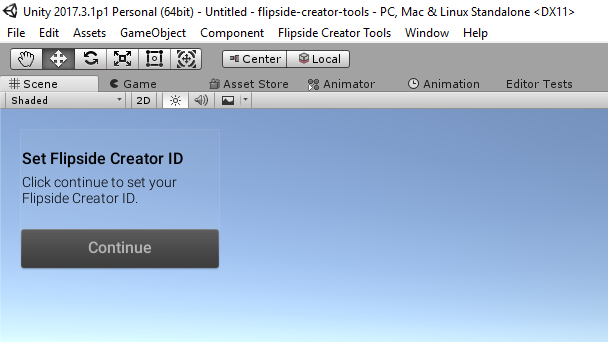

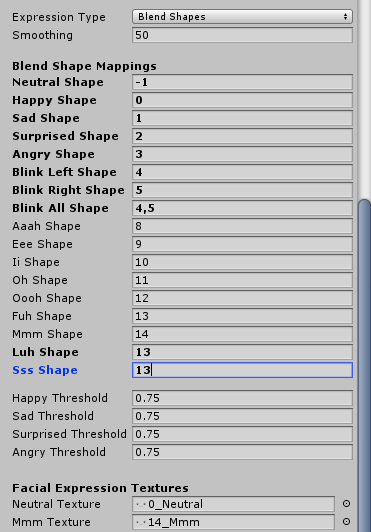

With this update, the Flipside Creator Tools get a massive overhaul that we've been working on for the past few weeks. In addition to fixes for some shader importing issues, there are three big areas of improvement:

1. The workflow has been made much simpler, with a new panel placed front and center that walks you through each step in the process.

2. You no longer have to match Flipside's exact list of blend shapes for facial expressions and lip syncing, because the Creator Tools now let you map our list to yours, including skipping missing blend shapes and mapping one of ours to multiple blend shapes on your characters. This means less time tweaking your characters in Blender before bringing them into the Creator Tools.

3. You can now hit Play in the Unity editor with your character's scene loaded, then hop into VR to see your character standing in front of you and check for scale and other issues.
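To make the blend shape mapping in point 2 more concrete, here is a minimal sketch of how such a mapping could be represented and applied. It is not the Creator Tools' actual implementation. The shape names are hypothetical, and the rule that the larger weight wins when two Flipside shapes drive the same character shape is an assumption made for illustration.

```python
from typing import Dict, List


def apply_blend_shape_mapping(
    flipside_weights: Dict[str, float],
    mapping: Dict[str, List[str]],
) -> Dict[str, float]:
    """Translate weights on Flipside-side blend shapes into a character's own blend shapes.

    - A Flipside shape missing from `mapping`, or mapped to an empty list, is skipped.
    - A Flipside shape mapped to several character shapes drives all of them.
    - Assumption: if two Flipside shapes drive the same character shape, the larger weight wins.
    """
    character_weights: Dict[str, float] = {}
    for shape, weight in flipside_weights.items():
        for target in mapping.get(shape, []):
            character_weights[target] = max(character_weights.get(target, 0.0), weight)
    return character_weights


# Hypothetical shape names on both sides, for illustration only.
mapping = {
    "Smile": ["MouthSmile_L", "MouthSmile_R"],  # one Flipside shape drives two character shapes
    "Blink": ["EyesClosed"],
    "Viseme_TH": [],  # this character has no matching shape, so it is skipped
}
weights = apply_blend_shape_mapping({"Smile": 0.8, "Blink": 1.0, "Viseme_TH": 0.5}, mapping)
print(weights)
```

Keeping the mapping as plain data means a character with missing or split shapes only needs a different table, not changes to the model itself.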

This is just the start, and we have lots more planned to make character creation faster and more fun.

I mentioned we were working on a public roadmap in the last update post, and that's ready now too! Head over to our Roadmap page to see what we're currently working on, what's already completed, and what is coming up on the road to Flipside Studio 1.0. There's also a Roadmap link in the Creator Community so it's always just a click away from the discussions.

Try it out, then head over to the Creator Community and let us know what you think!

- by John Luxford

Starting with last week's update, we decided to try for weekly updates as we approach Flipside Studio 1.0.

With that in mind, each update will be smaller and mainly focused on these areas:

We're also working on a public roadmap that we can share with the community to better understand where we're going, not just for 1.0 but for future versions as well.

Ruth and Steve (internal names only, since Flipside characters don't have visible names) are the newest members of our built-in character set. They're an elderly couple who just don't understand what all the fuss is about with this new technology.

One big usability change in this update is that objects you hover over now highlight if they're grabbable. We've noticed that a lot of newcomers to VR don't reach out far enough to grab things, so this provides a visual cue that you're within reach. It should also make it clearer which object you're grabbing when several objects are close together.
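As a rough illustration of how a hover highlight like this could decide what to light up, the sketch below picks the nearest grabbable prop within reach of the hand. The `Prop` type, the 15 cm reach radius, and the nearest-wins rule are all assumptions for this example, not Flipside's actual code.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Prop:  # hypothetical stand-in for a scene object
    name: str
    position: Vec3
    grabbable: bool


def prop_to_highlight(hand: Vec3, props: Iterable[Prop], reach: float = 0.15) -> Optional[Prop]:
    """Return the nearest grabbable prop within `reach` metres of the hand, or None.

    Highlighting only the single nearest candidate makes it clear which prop a grab
    will pick up when several are close together. The default reach is an assumption.
    """
    best: Optional[Prop] = None
    best_dist = reach
    for prop in props:
        if not prop.grabbable:
            continue
        dist = math.dist(hand, prop.position)
        if dist <= best_dist:
            best, best_dist = prop, dist
    return best


props = [
    Prop("mug", (0.10, 0.0, 0.0), True),
    Prop("table", (0.05, 0.0, 0.0), False),  # closer, but not grabbable
    Prop("book", (0.30, 0.0, 0.0), True),    # grabbable, but out of reach
]
highlighted = prop_to_highlight((0.0, 0.0, 0.0), props)
print(highlighted.name if highlighted else None)
```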

As always, the updates should happen automatically via Oculus Home or Steam. Looking forward to seeing your feedback and creations in the Flipside Creator Community!

- by John Luxford

Hot on the heels of update #2, we have a fresh batch of bug fixes for our Flipside creators. You should see the latest version automatically updated on Steam or Oculus Home.

The slideshow had some issues playing video files, which should now be fixed. For users who still have issues, see our Installing Flipside help page for a link to download additional video codecs from Microsoft.

In the process of getting our Flipside Creator Tools ready for everyone to import their own characters, we ran into some snags that left our characters' eyes less expressive than they used to be. Eyes should once again naturally look at points of interest, which we call eye targets. These include other characters as well as objects like the coffee mug, which the eyes will focus on briefly as the cup is picked up.
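One simple way to pick an eye target, sketched below under our own assumptions, is to let a recently interacted-with object (like the mug being picked up) take priority for a short time, and otherwise look at whichever target lies closest to the direction the head is facing. The `EyeTarget` type, the `focus_until` timer, and the 60° cone are hypothetical; this is not how Flipside necessarily does it.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class EyeTarget:  # hypothetical point of interest
    name: str
    position: Vec3
    focus_until: float = 0.0  # time (s) until which this target takes priority


def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in radians between two vectors (0 if either has zero length)."""
    norm = math.hypot(*a) * math.hypot(*b)
    if norm == 0.0:
        return 0.0
    dot = sum(x * y for x, y in zip(a, b))
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def choose_eye_target(
    head: Vec3,
    forward: Vec3,
    targets: Sequence[EyeTarget],
    now: float,
    max_angle: float = math.radians(60),  # assumed field of attention
) -> Optional[EyeTarget]:
    # A target that was just interacted with (e.g. a mug being picked up) wins briefly.
    for target in targets:
        if now < target.focus_until:
            return target
    # Otherwise look at the target closest to where the head is facing, within max_angle.
    best: Optional[EyeTarget] = None
    best_angle = max_angle
    for target in targets:
        to_target = tuple(p - h for p, h in zip(target.position, head))
        angle = angle_between(forward, to_target)
        if angle <= best_angle:
            best, best_angle = target, angle
    return best


friend = EyeTarget("friend", (0.0, 0.0, 2.0))
mug = EyeTarget("mug", (1.0, 0.0, 1.0))
lamp = EyeTarget("lamp", (0.0, 0.0, -1.0))
```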

It used to be white. Now it's black. Watching how people are using Flipside, this was a small change that should have a big positive impact :)

There were several other behind-the-scenes fixes, including updating the project and Creator Tools to Unity version 2017.3.1p1. If you're using the Creator Tools to import your own characters, you'll need to ensure that you're using that version of Unity or you may run into issues like your character's textures showing up wrong. You can find the correct version of Unity on their Patch Releases page.

As always, please post any issues, feature suggestions, or things you create over on the Creator Community.

- by John Luxford

We're excited to share our second big update to the Flipside Alpha! Existing users should see the latest version automatically updated on Steam or Oculus Home.

This update features too many little improvements to name them all, but here are the highlights:

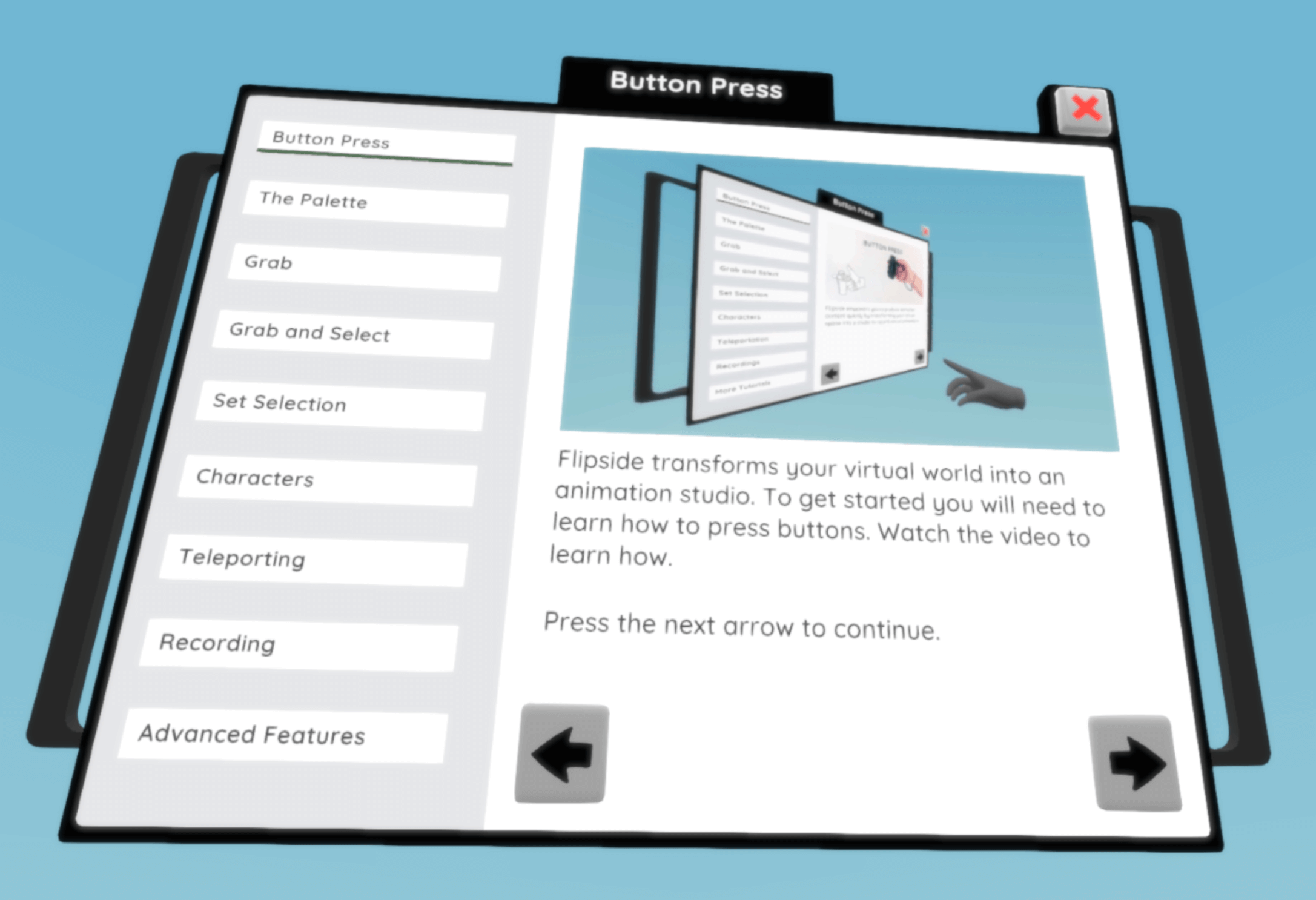

We spent a lot of time designing an in-app tutorial system to help new users get started and to make sure existing users have a clear overview of Flipside's controls and interface.

A performance optimization we made in the last update caused audio playback to be off by a small but noticeable amount. We're happy to say this is now completely fixed.

When you have lots of green on a set or on your characters, it can be hard to key out the background correctly using a green screen. We added a blue screen option to the skies, so you now have a choice of green or blue for keying out your backgrounds.
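To see why green props or clothing cause trouble, consider a very simple colour-difference key, sketched below: a pixel counts as background when the key colour's channel clearly dominates the other two. Real keyers in video software are far more sophisticated. The threshold and pixel values here are assumptions chosen for illustration.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def is_keyed(pixel: RGB, key: str, margin: int = 40) -> bool:
    """Simplified colour-difference key on 0-255 RGB values.

    A pixel is treated as background if the key channel exceeds both other
    channels by at least `margin` (an assumed threshold).
    """
    r, g, b = pixel
    if key == "green":
        return g - max(r, b) >= margin
    if key == "blue":
        return b - max(r, g) >= margin
    raise ValueError(f"unknown key colour: {key}")


green_shirt = (40, 160, 50)  # hypothetical green clothing on a character
blue_sky = (20, 40, 220)     # hypothetical blue screen sky
print(is_keyed(green_shirt, "green"))  # the shirt would vanish with a green screen
print(is_keyed(green_shirt, "blue"))   # but survives with a blue screen
```

Switching the sky to blue moves the key colour away from the greens in the scene, so those pixels no longer pass the test.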

Dropbox links are now automatically converted to the direct photo or video link, so you can paste Dropbox links into the slideshow and everything will just work.
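For the curious, a common way to turn a Dropbox share link into a direct file link is to replace its `dl=0` query parameter with `raw=1`, which Dropbox documents for rendering a file directly in the browser. The sketch below does that with Python's standard `urllib.parse`. It is an assumption that Flipside converts links this way; the example URLs are made up.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def dropbox_direct_link(url: str) -> str:
    """Rewrite a Dropbox share link so it points at the raw file.

    Drops any existing `dl`/`raw` parameters, keeps the rest (e.g. `rlkey`),
    and appends `raw=1`. Non-Dropbox URLs are returned unchanged.
    """
    parts = urlsplit(url)
    if parts.netloc.lower() not in ("dropbox.com", "www.dropbox.com"):
        return url
    query = [
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k not in ("dl", "raw")
    ]
    query.append(("raw", "1"))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment))


print(dropbox_direct_link("https://www.dropbox.com/s/abc123/photo.jpg?dl=0"))
```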

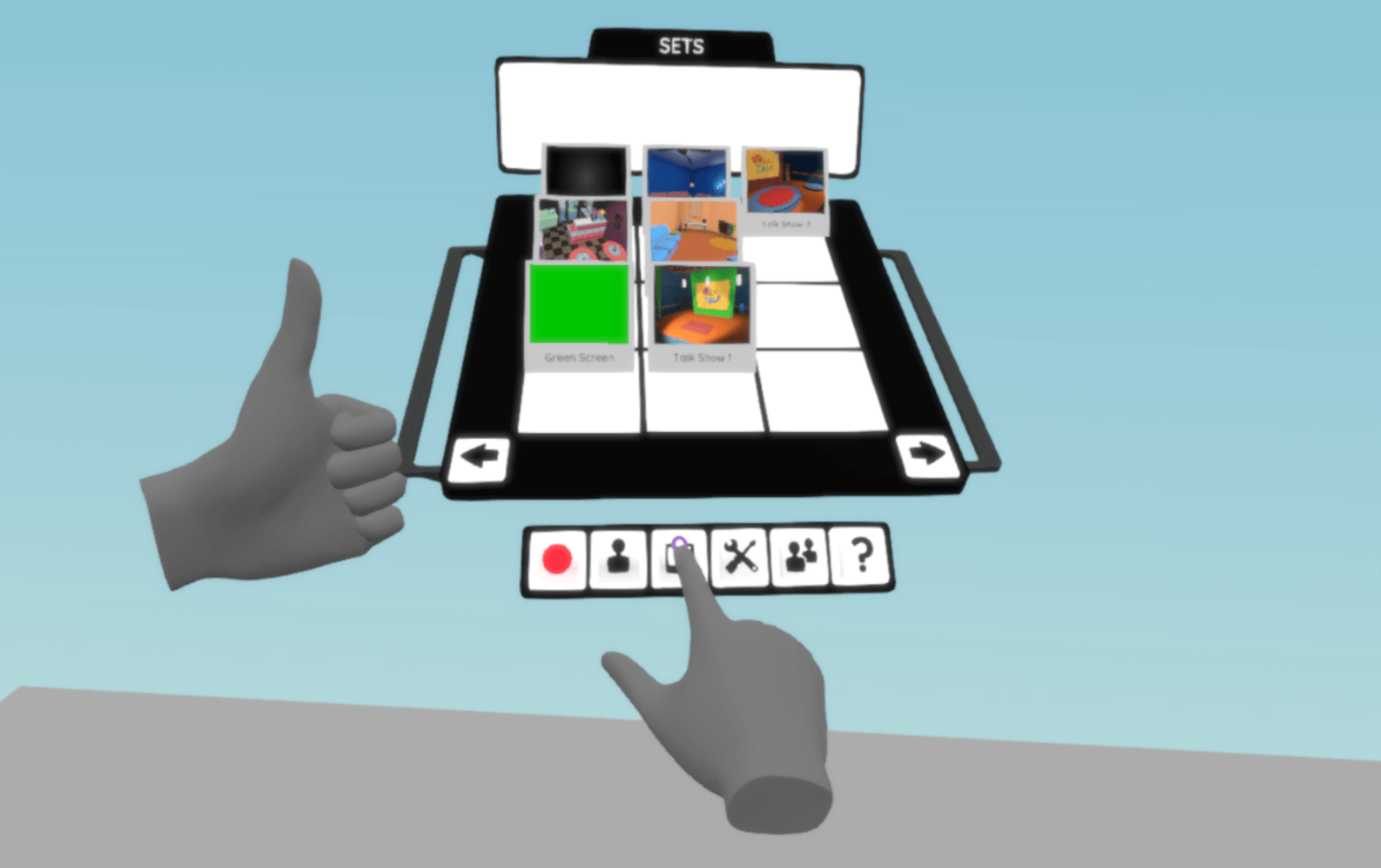

We completely redesigned your hands so it's easier to press the right button and interact with objects.

Some bug fix highlights:

- by John Luxford

Our first big update to the Flipside Alpha is out! Alpha users will see the option to update on the Flipside Steam page and in the Oculus Home app (or if you have auto-updates enabled, you should automatically have the latest version).

Since this is a big update with lots of improvements, let's start with the highlights:

Based on your feedback, we made several changes to the user interface:

This felt too video game-like and also had accessibility issues for single-handed use.

As a result, the following user interface elements have been added:

This falls in line with many other VR apps and games, and should make it easier for users to pick up.

We created a utility belt (more like floating holsters) by your waist where you can always grab these common tools.

The palettes have also been unified to act like a single menu system for the whole app.

This helps distinguish the Flipside user interface from show elements like props and sets.

To stop recording, you can press the usual A/X button on the Oculus Rift or the Application Menu button on the HTC Vive, but you'll also see a wristwatch appear on your left wrist while recording is underway, with a stop button on it. We decided to keep that bit of skeuomorphism :)

This update includes the first public release of the Flipside Creator Tools, which enable you to import your own custom characters from 3D models, complete with full-body movement, hand animations, facial expressions, lip syncing, and natural eye movement. Now you can be anything you can imagine in Flipside!

We've added a calibration button found on the underside of the Characters palette. This measures and stores your height, shoulder height, and arm span and uses these to provide more accurate motion capture.
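As an illustration of what a calibration step like this might measure, the sketch below assumes the user stands in a T-pose (arms straight out) and takes one reading of the headset and controller positions in y-up coordinates. The T-pose, the fixed offset from eye level to the top of the head, and using controller positions as a proxy for shoulder height and arm span are all simplifying assumptions, not Flipside's actual procedure.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Assumption: the top of the head sits roughly this far above the headset (metres).
HEAD_TOP_OFFSET = 0.1


@dataclass
class Calibration:
    height: float
    shoulder_height: float
    arm_span: float


def calibrate(headset: Vec3, left_hand: Vec3, right_hand: Vec3) -> Calibration:
    """Estimate body measurements from a single T-pose reading (y is up)."""
    height = headset[1] + HEAD_TOP_OFFSET
    # In a T-pose the hands are roughly level with the shoulders.
    shoulder_height = (left_hand[1] + right_hand[1]) / 2
    # Controller-to-controller distance approximates arm span (it ignores fingertips).
    arm_span = math.dist(left_hand, right_hand)
    return Calibration(height, shoulder_height, arm_span)


def avatar_scale(cal: Calibration, avatar_height: float) -> float:
    """Uniform scale that would make an avatar match the user's measured height."""
    return cal.height / avatar_height


cal = calibrate((0.0, 1.6, 0.0), (-0.8, 1.4, 0.0), (0.8, 1.4, 0.0))
print(cal, avatar_scale(cal, 1.7))
```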

The first alpha release had a bug where your character's feet would lift too easily off the floor. The calibration as well as some other character changes have made big improvements to how the feet feel and connect with the floor.

Looking up, down, and all around now moves your character's body much less, which helps convey body language that much better.

Flipside now supports both 2 and 3 Vive Tracker configurations, tracking either both feet or both feet and your waist. This opens up whole new possibilities for physical acting in Flipside.

The handheld camera had a bug where it wasn't projecting to the 2D output, which made it impossible to capture handheld shots. Now whenever you grab the handheld camera from your side, it will become the active camera.

The handheld camera now defaults to facing outward. A bug had it defaulting to selfie mode; facing outward makes the camera far more useful for quickly capturing the right shots for your shows.

We've improved the handheld camera's steadicam smoothing effect, helping you capture more stable footage of your shows.
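A steadicam-style effect is often built on exponential smoothing: each frame the camera closes a fraction of the gap to where your hand is, so small jitters get averaged out. The sketch below shows a frame-rate-independent version for position only, parameterized by a half-life. The class name and the default half-life are hypothetical, and this is not necessarily the smoothing Flipside uses; rotation would typically be smoothed separately (for example with quaternion slerp).

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


class SteadicamSmoother:
    """Exponential smoothing of a handheld camera's position.

    `half_life` is the time in seconds for the camera to close half of the
    remaining gap to the hand (an assumed tuning value).
    """

    def __init__(self, start: Vec3, half_life: float = 0.15):
        self.position = start
        self.half_life = half_life

    def update(self, target: Vec3, dt: float) -> Vec3:
        # Fraction of the gap to close this frame. Using 0.5 ** (dt / half_life)
        # makes the result independent of frame rate.
        alpha = 1.0 - 0.5 ** (dt / self.half_life)
        self.position = tuple(p + (t - p) * alpha for p, t in zip(self.position, target))
        return self.position


one_step = SteadicamSmoother((0.0, 0.0, 0.0), half_life=0.1)
one_step.update((1.0, 0.0, 0.0), dt=0.1)

two_steps = SteadicamSmoother((0.0, 0.0, 0.0), half_life=0.1)
two_steps.update((1.0, 0.0, 0.0), dt=0.05)
two_steps.update((1.0, 0.0, 0.0), dt=0.05)
print(one_step.position, two_steps.position)  # both about halfway to the hand
```

A longer half-life gives steadier but laggier footage, so it's a trade-off between smoothness and responsiveness.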

We can't wait to hear your feedback on these changes and see what you guys make in Flipside!

- by John Luxford

This year started with the decision to focus exclusively on Flipside as a company. That was a hard decision because up until that point we were a bootstrapped company relying on service-based income to survive. It was even harder because it meant deciding to cancel our then-imminent plans to release Lost Cities on the Oculus Rift. But far and away it was the right decision.

We were just starting the art production on our first Flipside show, Super Secret Science Island, in collaboration with the super creative comedy duo Bucko. Super Secret Science Island is an improv comedy show set on a deserted island, which really stretched our multiplayer and avatar capabilities. It also taught us reams and reams about what actors need to perform well in a virtual environment (see our 3-part acting in VR series).

We had also just been accepted into Tribe 9 of Boost VC, a startup accelerator focused on frontier tech companies like us working in areas like VR, AR, AI, and Blockchain. Boost VC believing in our vision was all the proof we needed that we made the right move. Throughout the program, we made some amazing relationships and connections, learned a metric ton, and moved our product forward by leaps and bounds.

While at Boost VC, we were connected with San Francisco comedian Jordan Cerminara, who became the writer and actor in our second Flipside show, Earth From Up Here. This show made extensive use of our camera system, our teleprompter, and our slideshow for delivering SNL Weekend Update-style news. In the show, Jordan plays Zeblo Gonzor, an alien newscaster who makes jokes about how crazy Earthly news must seem from the outside.

Having produced a complete season of two shows, we went back to the drawing board and determined what it would take to provide the same experience for creators who could work on their own, without our help troubleshooting issues on the fly. We talked to lots of creators, and really honed our vision for what Flipside 1.0 ought to be.

We also demoed Flipside at a ton of events, from All Access to VidCon, moved from co-working spaces into our very own office, and grew to a team of seven. Compared to the year before, having a whole team working on a single shared vision has been amazing, to say the least.

And now, just in time for the holidays, we're sending our first Flipside Early Access release out into the wild to our first group of beta testers, warts and all.

They say if you're not at least a little embarrassed about showing your app to the world you've waited too long. I wouldn't say that we're embarrassed because we're all immensely proud of the work and creativity that's gone into this release, but there's a list a mile long of things we can't wait to fix or add in.

2017 has been the craziest year yet for us, and ending it by getting Flipside into the hands of its first users feels like exactly how it should end. I expect 2018 to be even crazier, with more beta updates, a budding new creator community to grow, a wider public release and more content in the works.

We'd like to end with a huge THANK YOU to everyone who has been along for this ride and who has supported us in any way this past year. Flipside is the most ambitious and creative thing I think any of us have attempted to make, and it wouldn't be where it is today without you.

And another huge THANK YOU to our creator community who have waited patiently for us to get Flipside into your hands. The desire to help people share their stories has been at the heart of our company from before the beginning, and we can't wait to see what all of you come up with!

Sincerely,

The Flipside Team

PS. Have a safe and warm holiday, and an inspired new year!

- by John Luxford

By Rachael Hosein (CCO / Co-founder) & John Luxford (CTO / Co-founder)

The Winnipeg Winter Game Jam was this past weekend, which conveniently overlapped with the itch.io xkcd Game Jam, so we chose to make something for both.

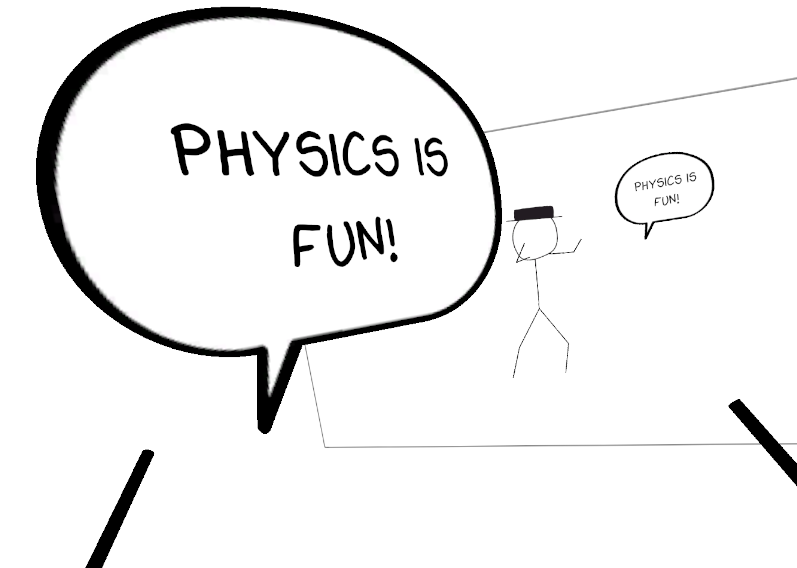

The result is xkcd vr, a virtual reality experience that lets you be all the characters from the xkcd web comic in VR using the same motion capture tech found in Flipside.

The first step was to see what being a stick figure avatar felt like in VR, which it turns out is ridiculous fun! From there we wanted to let people make their own comic panels with speech bubbles and props and save them as images that matched the xkcd style.

We did have to deviate from the style in some places, like adding outlines to our speech bubbles because without outlines they were harder to grab and place. But overall, we're pretty proud of how well we were able to match the look and feel.

Here are the features we managed to finish over the course of the jam:

This was a super fun project that we'd love to incorporate pieces of into Flipside proper. Imagine making your own animated shows as xkcd characters in a web comic world. How cool is that?

You can download xkcd vr for Oculus Rift and HTC Vive here. We hope you enjoy it as much as we enjoyed making it, and feel free to reach out - we'd love to hear what you think!

- by John Luxford

“The changes are dynamic and take place in real time. The show reconfigures itself dynamically depending upon what happens moment to moment… It’s a smart play.”

– Neal Stephenson, The Diamond Age

By John Luxford, CTO & Cofounder - Flipside

We started Flipside out of a shared passion for using technology to empower people to be creative and to tell their stories. We love building creation tools, and our mission is to enable a new generation of creativity using VR and AR, or as people have been dubbing the collective Virtual/Augmented/Mixed Reality combo, just XR.

When we started Flipside, we reflected a lot about where we saw these technologies going. We wondered: what will kids growing up in a time when AR headsets are commonplace expect from their apps?

If we can understand this, we can avoid building things that seem novel today but won’t have staying power. We call this target user The XR Generation.

Some key insights emerged from these reflections:

Just like our kids don't share our passion for the movies and shows we loved growing up, the next generations will prefer content made for their generation.

We're just starting to see the potential of 3D content today, but this content consumption pattern led us to the realization that as display resolutions improve and miniaturization lets hardware reach a consumer-friendly level of style, 3D content will overtake 2D content at some point in the future. At that point, kids will all want to wear XR glasses, because everyone else will be doing it and they won't want to miss out on sharing that experience.

2D content will still be a part of that experience, but it will simply become a virtual flat surface in a larger 3D context. And the reason users will prefer real-time rendering over pre-rendered content like stereoscopic 360 video is because real-time content can be interacted with. A 2D screen or even a 360 video just can't compete on that front. And as interactions become increasingly physical at the same time, the level of engagement will be profoundly different than today's touchscreen world.

Video games are today's 3D content rendered to a 2D screen, and the immersion is lacking. The content never feels like it's part of your world, and you never feel truly transported to another world, because there's a flat piece of glass always in the way. High resolution VR and AR will enable true immersion and physical interaction with the games and entertainment people consume in the future, and today's video games will seem antiquated by comparison, just like our favourite movies growing up seem to kids today.

The XR Generation will expect that they’ll be able to invite their friends to join them in any experience worth their time, and that it will be a rich and expressive shared experience.

The video game industry is already bigger than the movie industry, and games like Night in the Woods keep showing us how interactive storytelling can tell deeply personal and human stories while giving the player agency to explore the game's world and go where they choose, and to feel a stronger affinity with the characters they become while playing.

Like Neal Stephenson’s vision of “ractives” in The Diamond Age, we see the line between performer and viewer blurring until they’re almost indistinguishable. Not every piece of content will necessarily be fully interactive, but new forms of interactivity will emerge that haven't even been imagined yet.

Users already expect some degree of agency in their virtual worlds, and they will feel a need to participate in the creation of the impossible things that they are going to experience. Humans are born curious and unafraid to express their creativity, and that creativity is the key to the process of discovery and learning about the world.

If you can think it, why can't it become virtually real? And with advancements in haptic feedback, it may feel just as good as the real thing, too.

The idea of a single metaverse that everyone visits sounds great in sci-fi novels, but doesn't quite add up in practice.

There will be countless virtual worlds, not just one. But we can only interact with so many people at one time, and the intimacy of interaction is what gives it much of its inherent value.

We anticipate that there will be hugely popular metaverse-like apps, but no one app will be able to satisfy everyone's creative and entertainment needs.

Individual games will run outside of that metaverse, even if you launch them from inside of it and end up back there later, like Steam or Oculus Home today. With AR, there won't be a need for a metaverse at all, just for pieces of a metaverse like a unified avatar system.

For these pieces that make up a metaverse, standards will likely emerge just like we've seen on the web: your avatar will go with you from world to world or place to place. There will be an operating system that organizes and launches your XR apps, just like we have now, and glues the various standards together into a whole, but it won't be where users spend most of their time.

The key to realizing this long-term vision is to build the features necessary to bridge the gap between what is possible today, and what will become possible as the technology matures. This means providing real value to creators and viewers now, and building the future out in careful steps, which leads us to the following axioms.

360 video is going to get much better, but it still has inherent limitations like the viewer being stuck in a fixed point in the scene, and will never be fully interactive in the way a real-time rendered scene can be. Lightfield technology may get us closer to interactivity, but it has its own limitations too and is still years away from hitting the market.

Today's real-time rendering is also limited in its ability to render truly lifelike scenes, but this is improving rapidly and won't be a problem in the future we're talking about. Nothing but real-time rendering can provide users with an immersive as well as interactive experience, allowing them to affect its outcomes.

We're focusing on real-time now, because it aligns perfectly with the way we see the XR content of the future being consumed.

We are not building the metaverse. We are intentionally building a specific show production and viewing app for actors and viewers, centered on their needs.

This means that actors need to have the tools to help them act, and viewers need to have the tools to engage with the content. It's our job to take care of the rest, which should largely remain unseen to both sides.

Live shows and single-take recordings make production faster today, and allow for real-time engagement.

The easier we can make producing content, the more content can be produced, which provides content creators with a tangible benefit today and helps us get to a stage where the audience can jump inside of, and become part of, the show itself.

We started Flipside as a multiplayer experience from day one. This helps us achieve axiom 3, because multiple users can act together in real-time, without the need to jump between characters over a series of takes.

It also helps us push ourselves when it comes to the expressiveness we want in our avatars. It's one thing to act a part and watch it back, but it's another to see someone in front of you, and assess in real-time whether their expressions are being sufficiently conveyed.

Since VR and AR are new mediums, we know that the best way to accelerate the pace of discovery is to bring as wide a variety of content creators into them as possible. Because Flipside is a social show production tool, users can craft simple shareables or more elaborate live and recorded productions like comedies, dramas, and game shows.

An engaged audience comes back.

Our social interactivity framework allows each show to have a game element or custom activity that creates unique levels of engagement on a per-show basis.

While these are simple interactions in the beginning, they will become increasingly varied and rich over time as more creators use Flipside to create new worlds, stories, and experiences. This axiom comes more from live performance and theatre than from television or movies, which we explore in further detail in Les' post Virtual Reality Will Disrupt the Stage.

From the beginning, we understood the value of onboarding teams and scaling production.

Together, we are building the future of entertainment, and we’ve only just scratched the surface of what’s possible. We envision Flipside as nothing less than the technology that powers interactive entertainment of the future, something that empowers millions of creators to reach billions of viewers, not that those distinctions even make sense to the XR Generation to come.

If you want to join us on this creative journey, make sure to sign up for early access to Flipside.

- by John Luxford

By John Luxford, CTO & Cofounder - Flipside

I had my first visit to Austin, Texas for Unity’s Unite conference which ran from October 3-5, and wanted to share some highlights from the amazing week I had there.

Neill Blomkamp, maker of District 9 and Chappie, has been collaborating with Unity to produce the next two short films that are part of Unity’s Adam series. The series is meant to demonstrate Unity’s ability to render near-lifelike animated content in real-time, and it is just beautiful.

The first of the two new short films, Adam: The Mirror, was shown during the Unite keynote just before Neill Blomkamp was invited on stage to talk about moving from pre-rendered CG to real-time, and how his team felt like it was almost cheating because they no longer had to wait for each frame to render before watching the results. It was super cool to hear him speak; I’ve been a big fan ever since I first saw District 9.

It’s always a pleasure to hear Devin and Alex from Owlchemy Labs talk about VR, not just because they’re super entertaining, but because of the careful consideration they bring to each aspect of design and development.

This talk deconstructed their Rick and Morty: Virtual Rick-ality game, showing how they solved issues like designing for multiple playspace sizes, 360° and 180° setups, making teleportation work in the context of the game, and designing interactions for a single trigger button control scheme.

They also showed a spreadsheet of the possible permutations that the Combinator machine lets players create, and it reminded me a lot of their talk about Dyscourse having around 180 separate branching narratives all woven together. Sometimes solving a hard problem the right way takes an awful lot of work.

I arrived in Austin just in time to head over to the Unite Pre-Mixer put on by the local Unity User Group. As I was walking around the room, going between the drink station and the various VR and AR demo stations and chatting with the occasional person, I heard a “Hey you, you look like you’re just wandering around. Come talk to us!” from someone who then introduced himself as Byron. We all had a great chat, and it was a nice welcome into the Unite community.

Fast-forward a day and I head back to my Airbnb around 1am after cruising 6th Street with some friends I ran into / met that day, and sitting on the couch is a guy who introduces himself as Mike who just came back from Unite as well. Awesome! So we get to talking, and about 15 minutes later Byron walks into the house, looks at me, and says “I didn’t know we were roommates!”

We all laugh about it, find out we’re all Canadian too, and stay up until around 4am laughing and telling stories. Couldn’t have been a better living situation to find yourself in for your first Unite :)

I got invited to participate in a roundtable with a handful of other developers to share our thoughts on how Unity can better support AR development. It was an honour and super fun too! We talked about so many aspects of both AR and VR, challenges with input limitations, tracking limitations, trying to create useful apps instead of short-term gimmicks, and lots more.

This highlighted for me how receptive Unity is to learning from its community of developers and artists. Coming from the open source world, you can really see which projects listen to their user base, and which ones assume the user must have done something wrong. The bug couldn’t possibly be our fault.

It’s very encouraging to see Unity fall squarely on the right side of that cultural divide, and it’s something I felt echoed in each conversation I had with Unity’s developers over the course of the week.

Now I can’t wait for Unite 2018. I look forward to learning lots of new things again, and seeing many familiar faces. Thanks to everyone who made my first Unite such an awesome one!

- by John Luxford

By John Luxford, CTO & Co-founder - Flipside

This is part 3 of our blog post series about acting in VR and working with actors in virtual environments. Here are the two previous posts:

Now that we’ve explored some general lessons learned as well as lessons by actors for actors wanting to act in VR, here are some of the more technical discoveries we've made that can have a big impact on the quality of your final output.

Actors need to respond quickly to verbal and physical cues from the other actors present, as well as to changes in the environment. This is not a problem in the real world, because there is no noticeable latency between actors who share the same physical space, but actors connected over multiplayer are always seeing each other's actions from the past. The delay between an action and another actor seeing it is the latency between them.

In a virtual space, latency is impossible to avoid. Even the time it takes for an action taken by the actor to be shown in their own VR headset can be upwards of 20 milliseconds. Remote actors will see each other's actions with latencies of 100 milliseconds or greater, even over short distance peer-to-peer connections.

Depending on the distance and connection quality, that can be as much as half a second or more, in which case reaction times are simply too slow. Past the 100 millisecond mark, actor-to-actor response times can degrade quickly, making the reaction to a joke fall flat, or creating awkward pauses similar to those you see on a slow Skype connection. For this reason, a virtual studio needs to be designed to keep latency to an absolute minimum.
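One way to reason about this is as a latency budget: add up every stage between one actor moving or speaking and the other actor seeing or hearing it, then compare the total against the roughly 100 millisecond mark mentioned above. The stage names and numbers below are illustrative assumptions, not measurements from Flipside.

```python
from typing import Dict, Tuple

# From the post: past roughly 100 ms, actor-to-actor response times degrade quickly.
RESPONSE_THRESHOLD_MS = 100.0


def check_latency(budget_ms: Dict[str, float]) -> Tuple[float, bool]:
    """Sum one-way latency stages (ms) and report whether they fit under the threshold."""
    total = sum(budget_ms.values())
    return total, total <= RESPONSE_THRESHOLD_MS


# Illustrative numbers only, not measurements.
same_network = {
    "capture_and_display": 20.0,  # the post cites upwards of 20 ms in your own headset
    "audio_encode_decode": 25.0,  # assumed
    "network": 2.0,               # assumed: wired Ethernet on the same network
    "jitter_buffer": 40.0,        # assumed
}
long_distance = dict(same_network, network=150.0)  # assumed long-haul connection

print(check_latency(same_network))   # (87.0, True)
print(check_latency(long_distance))  # (235.0, False)
```

Laid out this way, it's easier to see that once the network stage alone grows large, no amount of local tuning brings the total back under the threshold.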

Fortunately, a virtual studio doesn't have many of the requirements that make peer-to-peer connections disadvantageous for video games. For example, the number of peers is going to be relatively low, and you don't need to protect against cheating or wait for the slowest player to catch up before reaching consensus on the next action in the game. So for VR over shorter distances, peer-to-peer is a better option than routing through a server in the middle (although a server can often decrease latency over greater distances because of the faster connections between data centers).

Buffering needs to be minimized as much as possible too. Minimal buffers also mean the system can't smooth over network hiccups as easily, so a stable and fast network connection is needed at both ends.
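The buffering trade-off can be made concrete with a fixed-delay jitter buffer: each packet is played a fixed time after it was sent, so any packet whose network delay exceeds that time arrives too late and has to be dropped or concealed. A shorter buffer means lower latency but more late packets. The sketch below counts late packets for a few buffer sizes; the delay values are made up for illustration.

```python
from typing import Sequence


def late_fraction(network_delays_ms: Sequence[float], playout_delay_ms: float) -> float:
    """Fraction of packets that miss their playout deadline in a fixed-delay jitter buffer.

    A packet sent at time t is played at t + playout_delay_ms, so it is late
    if its network delay exceeds the playout delay.
    """
    if not network_delays_ms:
        raise ValueError("need at least one packet delay")
    late = sum(1 for d in network_delays_ms if d > playout_delay_ms)
    return late / len(network_delays_ms)


# Hypothetical per-packet delays on a connection with occasional hiccups.
delays = [10, 12, 11, 30, 10, 13, 11, 45, 12, 10]
for buffer_ms in (15, 35, 50):
    print(buffer_ms, late_fraction(delays, buffer_ms))
```

On a stable connection the spikes are rare, so a small buffer loses little; on a bursty one, the buffer has to grow, which is why a fast and stable connection at both ends matters.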

A great way to keep latency to a minimum is to make sure the actors are physically located close together, preferably connected via Ethernet to the same network.

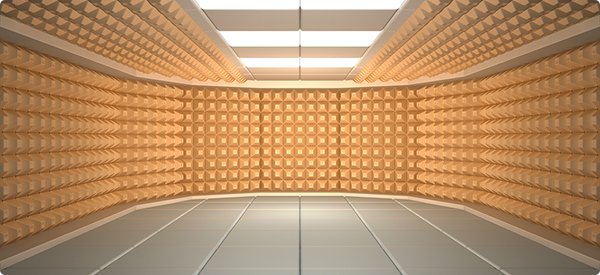

If you're recording a show with multiple actors in the same physical space, soundproofing between them becomes critical, because the microphone in each VR headset can pick up the other actors' voices. This causes lip syncing issues where the lips move when an actor isn't speaking, or even one actor faintly heard coming out of another actor's mouth.

Even footsteps on the floor, or the clicks from the older Oculus Touch engineering samples, can be picked up and become audible, or cause the character's lips to twitch. Wearing socks and using the consumer edition of the Oculus Touch controllers can make a big difference.

In-ear earphones are also key for ensuring voices don't bleed from the earphones into the wrong actor's microphone.

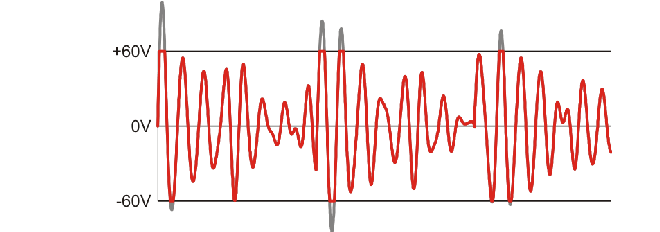

On the simplest level, this means adjusting the VR headset microphone levels in the system settings so that the voices at their loudest aren't clipping (i.e., distorting). It also means getting the audio mix right between the actors, the music, and other sound effects.

Clipping in a digital audio signal.
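Clipping happens when a signal tries to exceed the largest value the digital format can represent, so samples pile up at full scale. A quick way to check a test recording is to look at its peak level in dBFS (decibels relative to full scale, where 0 dBFS is the maximum) and count samples sitting at or near full scale. The sketch below assumes samples normalized to the range -1.0 to 1.0; the 0.999 threshold is an assumed cutoff.

```python
import math
from typing import Sequence


def peak_dbfs(samples: Sequence[float]) -> float:
    """Peak level in dBFS for samples normalized to [-1.0, 1.0]."""
    peak = max(abs(s) for s in samples)
    return float("-inf") if peak == 0 else 20 * math.log10(peak)


def clipped_samples(samples: Sequence[float], threshold: float = 0.999) -> int:
    """Count samples at or beyond the (assumed) near-full-scale threshold."""
    return sum(1 for s in samples if abs(s) >= threshold)


quiet_take = [0.5, -0.25, 0.1]
hot_take = [0.2, 1.0, -1.0, 0.5]
print(peak_dbfs(quiet_take), clipped_samples(quiet_take))  # about -6 dBFS, no clipping
print(peak_dbfs(hot_take), clipped_samples(hot_take))      # 0 dBFS, two clipped samples
```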

For traditional 2D output, a spatialized audio mix isn't ideal either, since the mix will be relative to the position and direction of the local actor's head in the scene. For this reason, a stereo mix is important if you're recording for 2D viewers. In Flipside, we built a way to output a stereo mix while recording and still replay the spatialized version in VR.

Another challenge is that VoIP-quality voice recording is substandard for recorded shows, capturing only about half of the frequency range you'd want. Higher-frequency sound waves oscillate faster than lower ones, and a digital recording can only represent frequencies up to half its sample rate (the Nyquist limit), so a 16kHz sample rate is too low to capture the higher frequencies of an actor's voice, losing detail and leaving them sounding muffled.

In practice, the ceiling where a voice stops being captured properly is around 7.2kHz, but to capture the full frequency range of a voice you want everything up to 12kHz or even higher, which requires a sample rate of at least 24kHz. This is a trade-off between quality and the size of the audio data being sent between actors. If the data is too large, it can slow things down, adding to the latency problem.
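The arithmetic behind this trade-off is straightforward. The highest frequency a recording can represent is half its sample rate, and the raw data rate grows linearly with the sample rate. The sketch below works through the numbers for uncompressed 16-bit mono PCM; real VoIP codecs such as Opus compress far below these figures, so treat them as relative sizes rather than actual network usage. The 7.2kHz practical ceiling quoted above sits a little below the theoretical 8kHz limit, since real anti-aliasing filters need some room to roll off.

```python
def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2


def min_sample_rate_hz(highest_freq_hz: float) -> float:
    """Lowest sample rate that can represent a given frequency."""
    return 2 * highest_freq_hz


def pcm_bitrate_kbps(sample_rate_hz: float, bits_per_sample: int = 16, channels: int = 1) -> float:
    """Uncompressed PCM data rate in kilobits per second."""
    return sample_rate_hz * bits_per_sample * channels / 1000


print(nyquist_hz(16_000))          # 8000.0 Hz theoretical ceiling for VoIP-quality audio
print(min_sample_rate_hz(12_000))  # 24000 Hz needed to reach 12kHz
print(pcm_bitrate_kbps(16_000))    # 256.0 kbps raw at 16kHz
print(pcm_bitrate_kbps(48_000))    # 768.0 kbps raw at 48kHz, three times the data
```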

There are pros and cons to both the Oculus Rift and the HTC Vive, and while both are amazing platforms in their own right, which one is anyone's favourite usually comes down to personal preference.

That said, for the purposes of acting, the Oculus Rift with Touch controllers and the HTC Vive each have distinct advantages.

On the Oculus Rift, the microphone generally sounds better, and the Oculus Touch controllers offer more expressiveness in the hands, as well as additional buttons which allow for control of things like a teleprompter or slideshow in the scene. We've found the Oculus Touch's joystick easier for precision control of facial expressions than the thumb pad on the HTC Vive controllers, and the middle finger button easier to grab with than the Vive's grip buttons.

On the other hand, the HTC Vive's larger tracking volume is better suited to actors looking to move around, although a 3-sensor setup can easily achieve a sufficient tracking volume for Oculus Rift users. The Vive also wins on cord length from the PC to the headset, and the Vive Trackers are awesome for doing full-body motion capture!


Working with professional actors in Flipside Studio for the past few months has really opened our eyes to the subtle balance needed to provide an environment they feel not just comfortable acting in, but inspired to be in, too.

We're glad we could share what we've learned with you, and as we continue seeking out new actors for our Flipside Studio early access program, we hope these lessons will inform creators and help you create better content, faster.