- by Lux

It is with both gratitude and heavy hearts that we announce the shutdown of the Flipside virtual reality app on November 21st, 2025.

From the very beginning, our vision was to create a new kind of social experience — one where connection through creativity and performance could thrive in virtual space. What made Flipside truly special wasn’t just the technology, but the incredible community of users who brought it to life. Your dedication, creativity, and belief in what we were building have inspired us every step of the way.

Our team poured passion and imagination into Flipside, working tirelessly to make the metaverse a place where art and human connection could shine. Together, we pushed the boundaries of what social VR could be, and we are so proud of everything we accomplished together.

From early shows like Earth From Up Here with Jordan Cerminara and Pixels with Stephen Sim and Caity Curtis, to Live From the 8th Dimension with Caffeine.tv and The Galaxicle Implosions with FANDCO, and so many more, your creativity truly pioneered the first generation of immersive entertainment and showed the world what was possible, far beyond what we ourselves had originally envisioned.

We tried to keep this vision alive for as long as we could in a challenging emerging market. While this chapter is coming to an end, we remain convinced that the world needs a platform like Flipside — a space where people can create, perform, and connect in new and meaningful ways. The future of virtual entertainment is still unwritten, and we believe social metaverse platforms centered around creativity will play an important role in continuing to shape it.

Thank you for being part of this journey with us. Flipside could not have existed without you, and we’re deeply grateful for every performance, every collaboration, and every moment you shared with us in our world.

With gratitude,

The Flipside Team

- by Lux

Video recording is as easy as adding a camera and then clicking Record Video on the camera switcher.

Record social media posts, YouTube videos, play-throughs, or anything else you can think of.

And the best part is it supports ALL of Flipside's advanced camera features:

On Quest, you'll find your videos saved directly to the Gallery app and you can sync them straight to the Meta Horizon mobile app.

On PC, you'll find your videos saved in the Documents/Flipside/Videos folder.

We also just announced the winners of our 1st Annual Flippy Awards! Congratulations to our dedicated creators, and a big thank you to everyone for making Flipside the special home of creativity that it is.

Here is the list of this year's winners:

Go give them a subscribe and check out their content in Flipside today!

We've also fixed several bugs related to character scale, walking, and sitting. These fixes make big improvements to multiplayer and playback consistency.

Find all of the information from this update here.

Quest Store

Pico Store

It's back-to-school time, and we know that instructors are getting geared up to provide future innovators with the skills they need to succeed. Are you an educator interested in using Flipside in the classroom? Join our educators' mailing list and let us know.

Thanks to everyone who notified us of bugs and shared feature requests! We love the ideas and appreciate when you share your feedback.

CLICK HERE TO FILE A BUG REPORT

We’d love to welcome you to the Flipside Studio Community Discord channel where you can connect with other creators, share your #MadeInFlipside creations, submit feature requests, and share bug reports.

Join the Community

- by Lux

Flipside 1.3 is out today and introduces the ability to scale any character to be anything from super tiny to absolutely huge, and we're sure you're going to have a ton of fun with it.

This opens up so many new creative possibilities for our users, from Honey, I Shrunk the Kids-style tiny adventures to out-of-this-world, Godzilla-sized spectacles. Combine that with any character you choose and the possibilities are endless!

To change a character's scale, open the Characters menu and use the +/- buttons in the Scale section under the character preview mirror. Click Reset at any time to change back to the character's original scale.

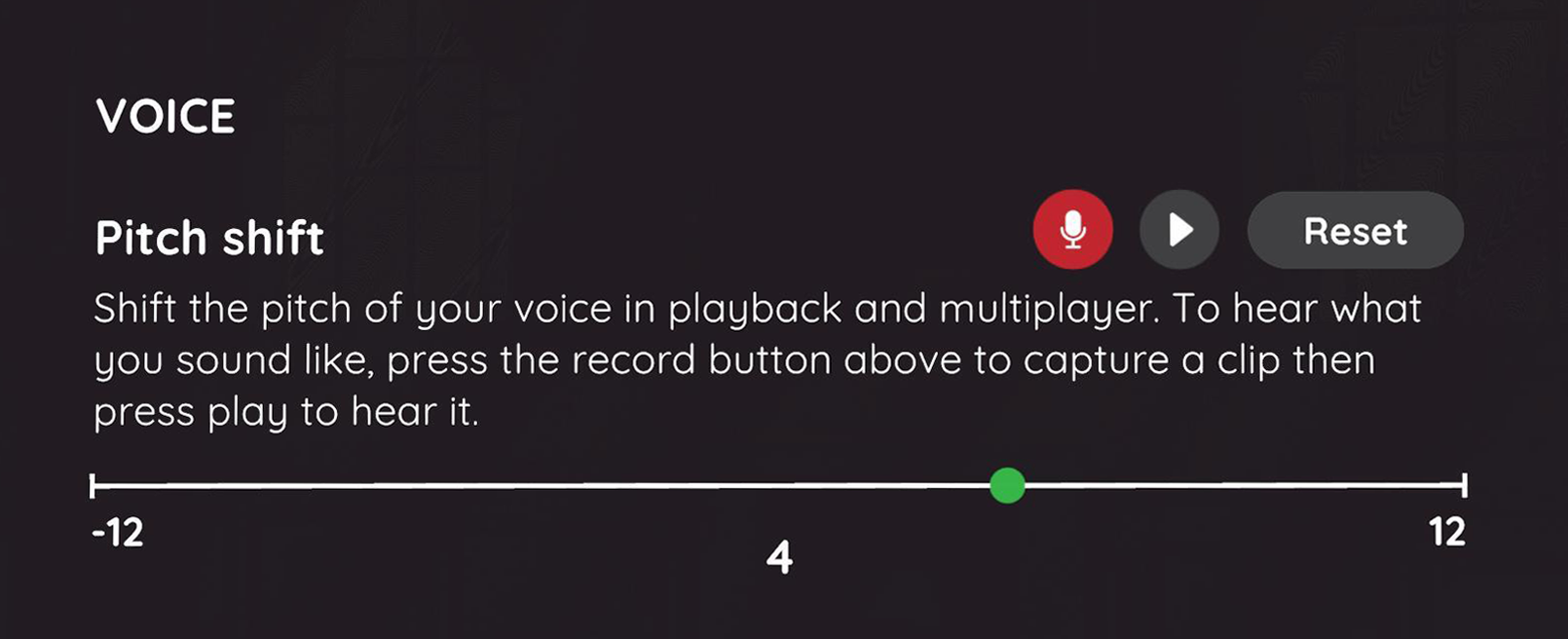

Flipside 1.3 also adds the ability to change the pitch of your voice in your recordings and to others over multiplayer. This is a great way to get even more into character, achieve fun effects like making yourself sound like a chipmunk, or just change up your voice for a little added privacy. Whatever the reason, Flipside's got you covered.

Click on the Settings tab on the dashboard menu and you'll see Pitch shift under a new Voice section of the settings. There's even a fun preview tool to hear what you'll sound like at different pitches.

You can now add your Meta Quest friends to your list of friends in Flipside, so there's no need to go looking for them in the Multiplayer search. They'll appear in the dashboard friend list automatically too, so your friends are always just an invite away.

Find all of the information from this update here.

Quest Store

Pico Store

- by Lux

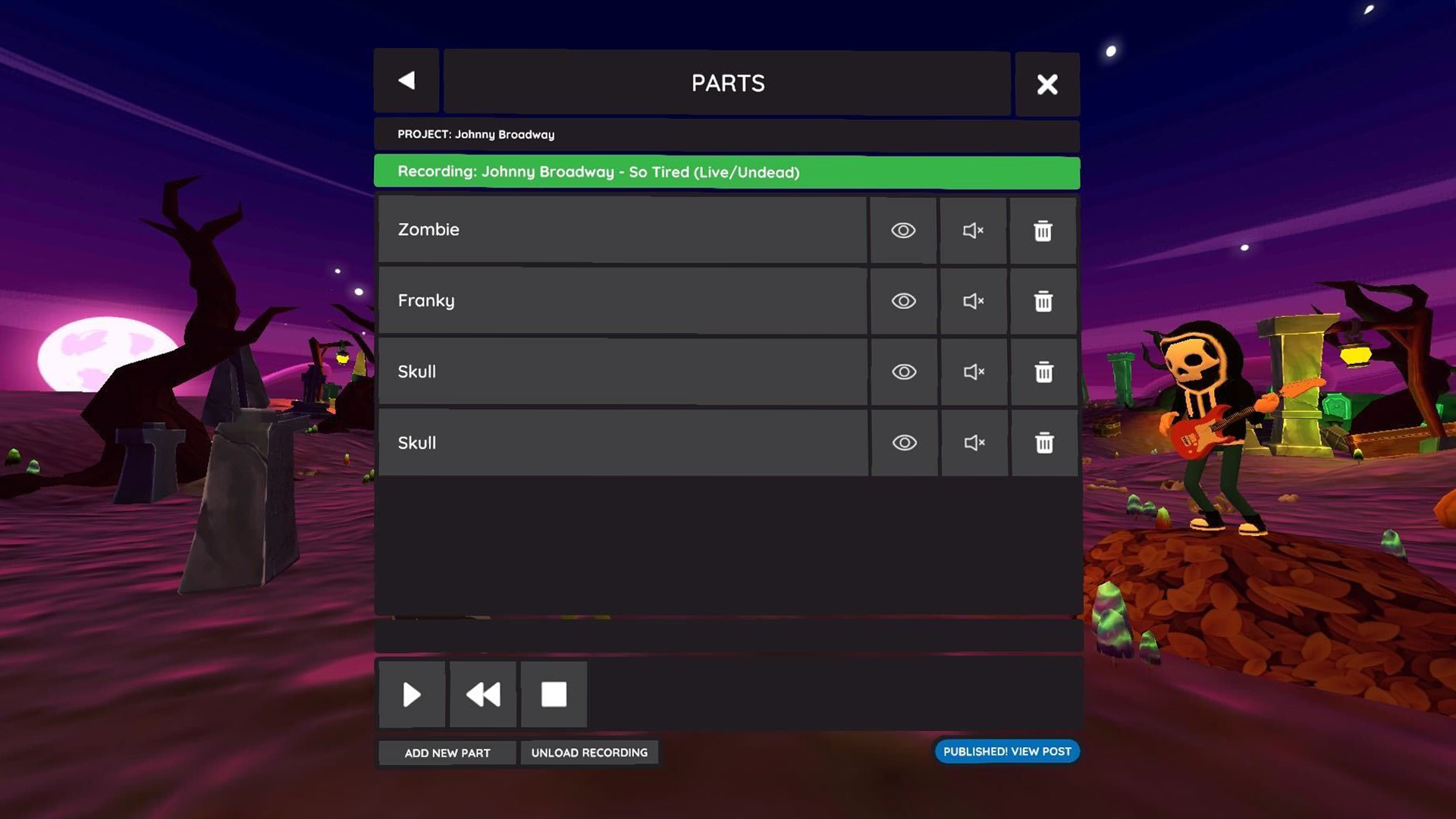

Here's an example VR music video made in Flipside. It's a song called "So Tired", written by Flipside's CEO and sung by a zombie who, as you can imagine, may be a little tired of his undead existence.

@johndeplume: Someone's got a case of the Mondays 🙄 Words & music by Johnny Broadway. Music video by Rachael Hosein. Watch it in VR on Flipside (https://www.flipsidexr.com).

It's best viewed in Flipside, where you get a front-row seat to the graveyard punk performance, so we recommend hopping into Flipside on your Meta Quest 3 or Pico 4 VR headset. That said, the video is pretty good too.

Here's how to make a VR music video like that for your own songs (or ones generated with AI apps like Suno AI).

The first step is to export the lead vocal stem file from your song. This way you have the original song file and a second file with just the lead vocals.

If you've recorded it yourself, this can be done by muting all tracks except the lead vocal in your DAW and exporting that as a new WAV file.

If you don't have access to the original recording files, you can use the free StemRoller app to separate the tracks for you. StemRoller will separate out the vocals as well as the bass, drums, and other instruments, but for our purposes we just need the vocals.wav file that it generates.

You should now have two audio files:

- the original song
- the lead vocals only

Log in to the Flipside Creator Portal, then click on the Audio tab and upload these two files to your private audio collection in Flipside.

In Flipside, you can either record the animated band members together with friends over multiplayer, or record them one after another by adding a new part for each band member.

One thing to note: there is currently a bug that prevents props from being added to a recording after the fact. You can add a prop mid-recording, but it will pop in at that moment, so any props you need at the start of the recording should be taken out and ready before you record the first part.

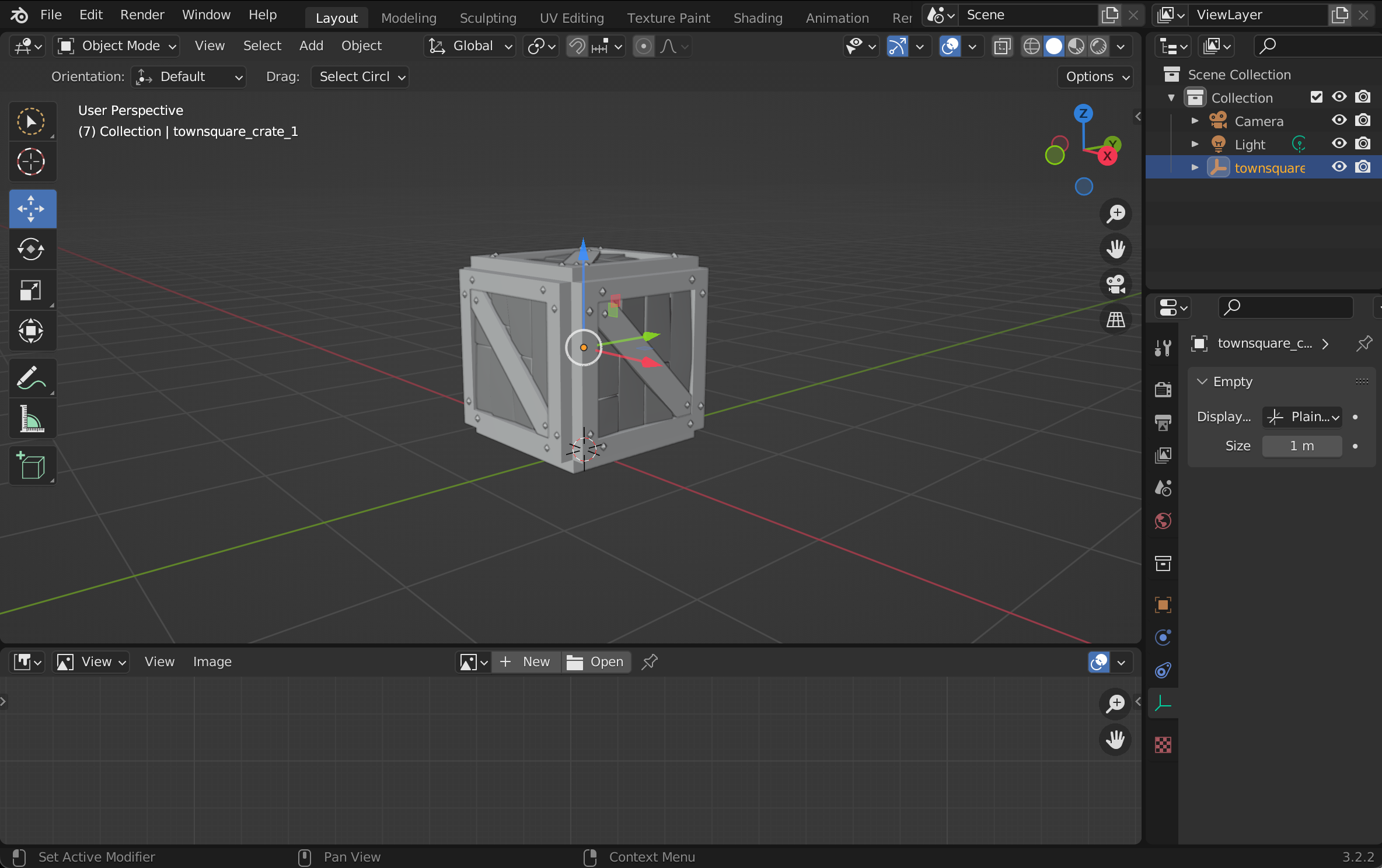

In the Sets menu, choose the set you want the performance to take place on, then set up your props so they're where you want them and can be easily used while recording the performance itself.

You should now be on set and almost ready to record. Let's start with the lead singer part, assuming you're recording alone.

Go to the Characters menu and choose the character you want to perform the lead. It's also a good idea to calibrate at this time, so click the Calibrate button in the bottom right corner of the Characters menu, then stand in a T-pose for a few seconds as instructed.

On the main menu, choose Audio Browser then go to All Audio > Imported. You should see the two audio tracks you uploaded through the Flipside Creator Portal earlier. Click on each one and choose Add to Audio Controller.

Next, click on the audio track that has the lead vocal only and choose Voice from the drop down menu. This will ensure that track powers your lead singer character's voice as you record.

Then, on the Audio Controller, check the checkbox next to each track so they're both selected to play in sync.

Lastly, click Play on Record to ensure they'll both start playing automatically at the start of your recording.

Get your character into position to start the recording, grabbing any props you need (the microphone, for example), then click Record on the main menu and wait for the countdown.

With the lead vocal track powering your character's voice, you're free to focus on performing the physical parts of the performance.

When you're finished, click Stop then choose to Keep the recording.

Once the recording has finished saving, load it and click the Mute icon next to the lead singer's part so the lead vocal isn't heard twice. We only needed that track to power the mouth movements.

If you're overdubbing parts for additional band members, you can now change characters, get into a new position, and choose Add New Part. Repeat this for each band member until the recording is complete.

If you've made it this far, you probably have a brand new VR music video on your hands, which you can publish to your channel using the Publish button while that recording is loaded.

You've now created a VR music video people can watch and feel like they're right inside the experience.

Have fun!

- by Lux

By Lux (Flipside's CEO & Co-Founder)

When you make art, you create something new out of the resources and materials at your disposal. Paper, pencil, pen, paintbrush. Hands, hips, feet, voice, instruments. Computers. Controllers and headsets. Software.

Some materials are repurposed from other things, or intended for other purposes. Some art uses only your own body, like dancing or singing. Some artists use very precise tools, like a saxophone or a violin. The range of things you can make art with is almost limitless.

Software makes up some of the most sophisticated tools artists have ever had available to them: Ableton Live for music, Photoshop for images, Final Cut for video, and Flipside for spatial content. More and more, AI is influencing each of these areas too.

These belong to a wide and varied category of software called creation tools, made for the express purpose of helping others create.

The rest of this post is going to focus on software creation tools, since that's what we make. I believe software creation tools have the potential to influence the art that's made with them in more ways than physical tools do, which makes it important for developers to ask questions about the nature of creation tools.

A question I try to think about from time to time is, what does it mean to make tools for others to create with?

What are the implications of this question? What are the values that arise from a given position on it?

When you make software that helps others make something that's theirs, it comes with a certain responsibility. You're supposed to get out of the way and try not to influence how it looks, feels, or sounds, because it's not about you. It's not your art; it's about assisting their creation.

A creation tool maker makes the invisible stuff, the glue that holds together the seams of a digital creation. That's our mission in a nutshell.

There's a responsibility in holding someone else's creative work in your own creative hands and not inadvertently dictating what the end result should be. User-generated content should be made by the user, but default art assets, default settings a user doesn't know they can change, feature choices and limitations, and even workflows built into how you use an app, all influence the things that can be made or the direction users typically go creatively within a creation tool.

There are also limitations that may influence the work no matter what you do, like the 8-bit sounds of early video game consoles, or early monitors with only 256 colours. In our case, rendering for VR is more costly than rendering for mobile or desktop, so we choose default art styles that will still look great but perform well on VR headsets. As a result, we tend to steer away from realism in our default choices, knowing this will have some influence on our creators and their output.

Our tools are inherently going to have some influence on the art made with them. As tool makers, we need to be aware of that so we can understand, and choose as carefully as we can, what that influence is going to be.

Some of it is temporary, since software is always in flux, and necessarily includes temporary limitations that become more flexible or smoothed out over time. Developers have the eternal struggle of knowing that what's around the corner is going to make X or Y feature better, but that users have to wait and live with the limitations that exist today.

I touched on feature design, workflow choices, and choosing default settings earlier, and these are hard problems even for experienced developers. User experience is ever-evolving, especially in areas like VR that are still not fully charted territory.

A developer must understand that when you choose a default setting, most users are unlikely to ever change it. So if a capability is only exposed by changing that setting, it may never be discovered or used by the vast majority of creators using your tool.

Similarly, a workflow with a default path from step A to B to C, plus a "See More Options" choice that opens up steps D and E, will lead all but a small percentage of creators to stick to the A-B-C path. Creators are busy and they don't have time to crawl through every possible option in your software when they're just trying to get something done, so it's important to choose default paths carefully and know where you might be exposing or hiding certain features.

When a new user first starts using your creation tool, they'll need guidance on what to do to accomplish what they want to make with it. Contextual tool tips are great for explaining things in the moment, and can even be used to highlight important elements in the user interface. Tutorials and references accessible within the app can be great resources, but they can become overwhelming because of the sheer amount of documentation, or get forgotten and go unused because they're often presented out of context.

Onboarding tutorials are a great way to walk the user through a core set of features or recommended workflow and ensure they successfully perform each of the steps. This can be a very powerful way to help a user through their first few steps with your app, but remember, you're teaching a certain workflow that users are unlikely to deviate from unless they become power users over time or happen upon other, less visible features.

After the initial onboarding, the user needs to feel confident creating something with the app. This is where guides and templates come in. They need to create something, but not necessarily their thing, yet.

Templates, or in our case, default characters, props and sets, can be a great way to get users going quickly by reducing the choices they have to make in order to get to that first creative reward. But even then, a user can hop into character and not know what to say. So we introduced an AI script generator that will write you a monologue, a dialogue, a poem, or a bunch of awful dad jokes.

All of these work together to reinforce the creative flow when a user is still new to the tools and doesn't have that freedom of familiarity to just hop into being creative. This is central to our thinking on how AI fits into Flipside as a creation tool. We don't see it as a replacement for human creativity, but rather, as an assistant that can jump in to grease the wheels where needed.

There are many more areas where we think about guiding users and balancing that against leading them too far, along with all the other questions I asked earlier in this post, but this post would be much too long if I were to include them all.

We believe creation tools are an important category of software, and have dedicated our lives to making the first generation of creation tools for VR, AR, and the metaverse. We believe this work is important for several reasons:

Flipside's core mission is to help everyone bring their imagination to life and share it with the world. That's a tall order, but Flipside has lowered the barrier to content creation in what is one of the most challenging areas of technology, and most importantly, we've facilitated the creation of thousands and thousands of pieces of content - sketches, jokes, dances, music, reenactments, improv, and more - that otherwise wouldn't have existed.

We are honoured to be building Flipside for creators like you, and everyone that has joined us on this journey, and thank each and every one of you for believing in and wanting to build this crazy new world of spatial entertainment along with us.

- by Lux

DesignRush recently spoke with our CEO & Co-Founder John Luxford (aka Lux) about how brands can leverage user-generated content (UGC) in the metaverse.

Read on for Lux's perhaps contrarian views on what the metaverse is, how it's evolving, what role VR and AR play in shaping its future, and how brands can take advantage of these immersive technologies.

Read the full interview here: Learn How Brands Should Leverage UGC in the Metaverse.

- by Lux

By Lux (Flipside's CEO & Co-Founder)

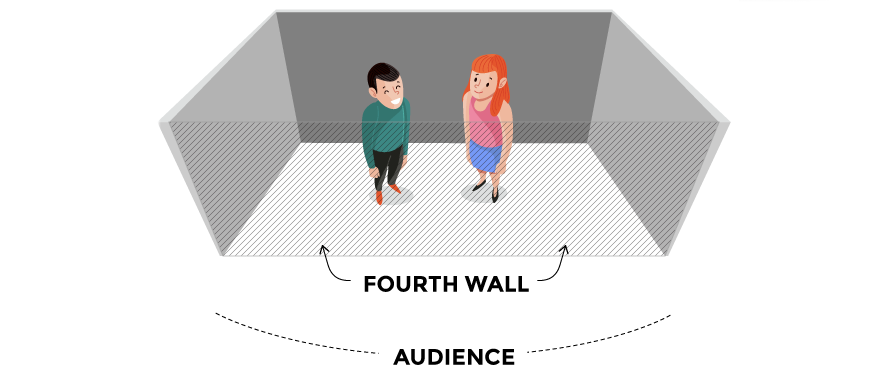

The fourth wall is a concept in traditional film and theatre which is essentially the boundary between the performers and the audience.

While there are exceptions, like theatre in the round, in most styles of theatre the audience is looking at the performance from the front of the stage. In theatre lingo, this is known as a proscenium stage. In film, this would be the camera itself.

Breaking the fourth wall happens when an actor deliberately addresses the audience, speaking directly to them, or speaking directly into the camera. This was popularized in television shows like BBC's The Office, where actors would turn and look at the camera as if conferring with the audience that something absurd just happened. This can be used as a way to increase engagement with the audience, add emotional impact, create a memorable moment in the experience, and even make the audience feel like part of the show.

In immersive theatre, there's no obvious fourth wall, yet there's still an audience whose perspective needs to be managed throughout the performance.

Some virtual worlds have obvious fourth walls because they mimic real-world theatres or stages. Flipside has a number of these, including the black box theatre, the comedy club, outdoor stages, and our newest, the Laughs N' Riffs stage. In these cases, it's obvious where the audience will be, and the actors should make sure to craft the experience for that perspective.

Others, like our kitchen, classroom, or campfire sets, have no obvious fourth wall, but one must still be added to the environment so that performers will know where the audience is expected to be.

An added question to be asked in crafting an immersive experience is whether the audience is participating in the story or simply watching, and whether this can be used to increase the sense of immersion or potentially take away from it.

In immersive worlds, audience comfort is also a factor to consider. Some audience members may not be comfortable having to engage, just like they may not want to sit in the front row of a stand-up comedy show for fear of becoming part of the show.

All these considerations are important in deciding how you want to craft a piece of immersive entertainment.

In immersive entertainment, there are two ends to the spectrum of breaking the fourth wall:

Between these ends are other approaches such as giving a knowing nod to the audience but not engaging verbally, and many other ways we have yet to dream up. Immersive performance is a ripe ground for innovation.

There are also examples of breaking the fourth wall for specific purposes, such as:

We think a lot about the fourth wall in Flipside. Our team has many discussions and plans for evolving the audience's perspective and experience. We look forward to exploring this subject with Flipside's community of creators as Flipside continues to evolve.

Today, when you load a set to record a post, Flipside shows an "Audience" marker where the audience members will spawn when loading the post to watch. This is the first step to something more full-featured that we're working on fleshing out in a variety of ways, such as:

One key challenge to overcome with moving the audience between positions or giving them vehicular controls is simulator sickness. While we already have features like vignetting of the user's POV when walking around in Flipside or rotating the world while invisible, this needs to be considered in any implementation of audience movement as well. For example, a rails experience could avoid tilting the audience while moving them between point A and point B by letting the creator adjust only the yaw, not the roll or pitch.
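The yaw-only constraint described above can be sketched in a few lines. This is a minimal Python illustration under our own assumptions, not Flipside's implementation: the `Pose` type, the `rail_pose` function, and the smoothstep easing are all hypothetical. The audience pose stores only a position and a heading (yaw), so there is simply no pitch or roll for the rail to tilt, and the easing is an assumed comfort choice that starts and stops the movement gently.

```python
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical audience pose: position plus heading only.

    Pitch and roll are deliberately absent, so a rail can never tilt the viewer.
    """
    x: float
    y: float
    z: float
    yaw_deg: float  # rotation about the vertical axis, in degrees


def smoothstep(t: float) -> float:
    # Ease in and out so the ride starts and stops gently (assumed comfort choice).
    return t * t * (3.0 - 2.0 * t)


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


def lerp_yaw(a: float, b: float, t: float) -> float:
    # Turn the short way around, e.g. 350 -> 10 degrees passes through 0, not 180.
    delta = (b - a + 180.0) % 360.0 - 180.0
    return (a + delta * t) % 360.0


def rail_pose(start: Pose, end: Pose, t: float) -> Pose:
    """Audience pose at progress t (clamped to 0..1) along a straight rail."""
    t = smoothstep(max(0.0, min(1.0, t)))
    return Pose(
        x=lerp(start.x, end.x, t),
        y=lerp(start.y, end.y, t),
        z=lerp(start.z, end.z, t),
        yaw_deg=lerp_yaw(start.yaw_deg, end.yaw_deg, t),
    )


if __name__ == "__main__":
    a = Pose(0.0, 0.0, 0.0, 350.0)
    b = Pose(10.0, 0.0, 0.0, 10.0)
    for step in range(5):
        print(rail_pose(a, b, step / 4))
```

Running it prints five poses whose heading turns from 350 through 0 to 10 degrees while the position advances along x. Because the pose type has no pitch or roll fields, a creator's rail can only ever turn the audience, never tip them.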

There's also the challenge of watching content in mixed reality versus fully immersive virtual reality. In mixed reality, the audience may often be arranged more like a theatre-in-the-round audience, except that the content would likely need to be scaled to fit the room instead of being life-sized, and the same piece of content in Flipside may end up being viewed in both of those contexts.

Other considerations include non-headset-wearing audiences, how we might enable audience members to become avatars within the content, and whether we let them drop and control virtual cameras in the scene so they can feel like the director of the show.

Each of these is a powerful way to engage audiences and ultimately, our job is to empower creators to craft the experience for all audiences in such a way that makes for a great experience for each of them. It's a tall order, but we've got more than a few tricks up our sleeves that we'll be introducing over time.

- by Lux

By Lux (Flipside's CEO & Co-Founder)

There's an interesting effect in technology where platforms align themselves with certain terminology in order to try to differentiate from one another. But eventually, most companies converge on common words. Web 2.0, web3, cloud computing, edge computing, the list goes on.

In virtual reality (VR) and augmented reality (AR), these terms were coined long before the technology was ready for an industry to form around them. Then came the Oculus Rift, which was dubbed a VR headset. But it could just as easily have been called a VR visor, or the more technical head-mounted display (HMD) that people sometimes use.

Then Microsoft came along and coined mixed reality (despite the fact that mixed reality meant something already) and branded their VR platform Windows MR. Other companies tried to coin a term that would encompass both AR and VR and came up with XR, as in extended reality.

Now Apple has entered the fray with the Apple Vision Pro (AVP) and is calling it a spatial computing device. They even go so far as to discourage app developers from referring to either AR or VR when describing their AVP apps.

Windows MR has since been abandoned, and many companies continue to use XR, but the influence Apple has on shaping industry perception is massive, so my prediction is that we'll all eventually converge around spatial computing, unless they pivot to something else.

But when talking about VR experiences, the word spatial leaves something to be desired. While yes, it does suggest a blend of both AR and VR, there's another word that I think might be lost in the shuffle: Immersive.

You see, spatial refers to the three-dimensionality of what's displayed, but immersive refers to the sensory experience of the user. One is technology-centric and the other is user-centric. Which is why you don't hear about spatial theatre groups, but you do hear about immersive theatre groups, and when users describe an experience they just had, they don't say how spatial it was, they say they felt immersed in it.

This split makes me think of Simon Sinek's TED talk about how people don't buy what you do, they buy why you do it. The word immersive touches on a why. Spatial just describes a piece of technology. Which is odd for Apple, the original company to say "Here's to the crazy ones" in their Think Different campaign.

Immersive speaks to one of the values of the experience the user has, and the implied belief that immersion offers something to the user experience that non-immersive content can't offer.

But we usually think of immersion as a VR thing, and not an AR thing. I think there are degrees of immersion, and AR still meets several of them. When you lose yourself in the experience, that's immersion, whether you can see the edges or a peek of the man behind the curtain.

In AR terms, this immersion can range from creating the effect that there are portals you can peer through in your room's physical walls, to the effect of "skinning" your reality. Imagine an AR skin that makes your world look like Sin City, or The Walking Dead. That would surely be pretty immersive, and yet it's only augmenting your real-world experience.

Projection mapping has become a common technique used by VJs to make stages and walls at festivals and raves feel more immersive, and a similar effect is employed by installations like Van Gogh: The Immersive Experience. There are even immersive audio experiences like Darkfield Radio that immerse the user without using any visuals at all.

The key to immersion is helping the user get lost in the experience. When you lose yourself in something, you lose your sense of time, you forget about your outside cares, you take things in more fully, and you leave having had an experience that may have awed you or moved you in some way that you felt you were a part of. That's the magic of "spatial computing", not the technology.

- by Lux

It's been one year since we officially launched on the Meta Quest app store, and what a year it's been!

Our user base has grown to over 50,000 creators who've made over 160,000 spatial recordings. We're sincerely grateful for every one of our users as well as the positive reviews and messages of encouragement you've sent us. Those words and the content you're making and sharing keep our team motivated every day to make Flipside the best we can make it, so thank you from the bottom of our hearts.

Here are some of the highlights we've had over the past year:

Since launch, we've released 14 software updates, with some major new features, including:

We've substantially expanded the list of built-in characters, props, and sets to now include hundreds of options.

We launched with a library of 55 characters, 49 sets, and 330 props. We now have 111 characters, 69 sets, and too many props to count.

And of course, you can always create custom characters through our Ready Player Me integration, custom sets with our Blockade Labs integration, or use our Flipside Creator Tools plugin for Unity to import your own custom characters, sets, and props. Sky's the limit!

We've expanded beyond the Meta app stores to include support for Pico 4 headsets too.

We've changed the name of our app from Flipside Studio to simply Flipside, emphasizing that Flipside is now a metaverse social media platform for next-gen creators to be able to share their spatial recordings directly with their fans.

Our vision is to build a metaverse powered by imagination, and to democratize the creation of spatial and immersive content so that anyone with an idea can bring that idea to life and share it with the world.

We believe this is the missing piece of the metaverse, enabling real-time content creation so that you just act things out and they're instantly ready to share.

Read more about this new direction here

As more and more creators publish to Flipside, we want to highlight some of our favourites here for you.

- by Lux

By Lux (Flipside's CEO & Co-Founder)

What if you were to combine social media with a virtual TV studio? The result would be a social media platform focused on pure imagination and hyper creativity. Sounds crazy, but it makes total sense in the metaverse.

And that is exactly what Flipside is, the first social media platform built on a foundation of pure imagination. You can think of it as the TikTok of immersive entertainment, or like stepping inside of a content creator’s mind.

That's because the thinking that's gone into existing metaverses is too closely tied to the original vision of what a metaverse is, which makes great fodder for science fiction but isn't a complete vision of what a metaverse could look like. It's missing real-time content creation at the heart of it all, which is exactly where Flipside comes in.

Spatial computing empowers a level of real-time content creation never seen before. Instead of animating frame by frame, or building interactivity in a game engine, now you just become a character on a virtual set, and your words and movements become the performance. When you press save, the recording is done. That fast.

We’re seeing the emergence of what we call next-gen creators. Creators who are XR native and spatial first. Who feel perfectly themselves embodying an avatar and who understand that identity is something that can shift and morph even from moment to moment. Who step through virtual worlds as fluidly as stepping through a door. The inventors and discoverers of what’s possible in this new spatial computing paradigm.

And we know audiences have been dreaming of the idea of being able to jump inside of the content they’re seeing, from Mary Poppins and gang jumping inside of a chalk drawing, or Alice falling down the rabbit hole long before that.

So here’s our tribute to next-gen creators and their fans. We’ll be there watching your creations and celebrating the vision you bring to the birth of a new space for imagination and endless creativity.

Welcome to Flipside.